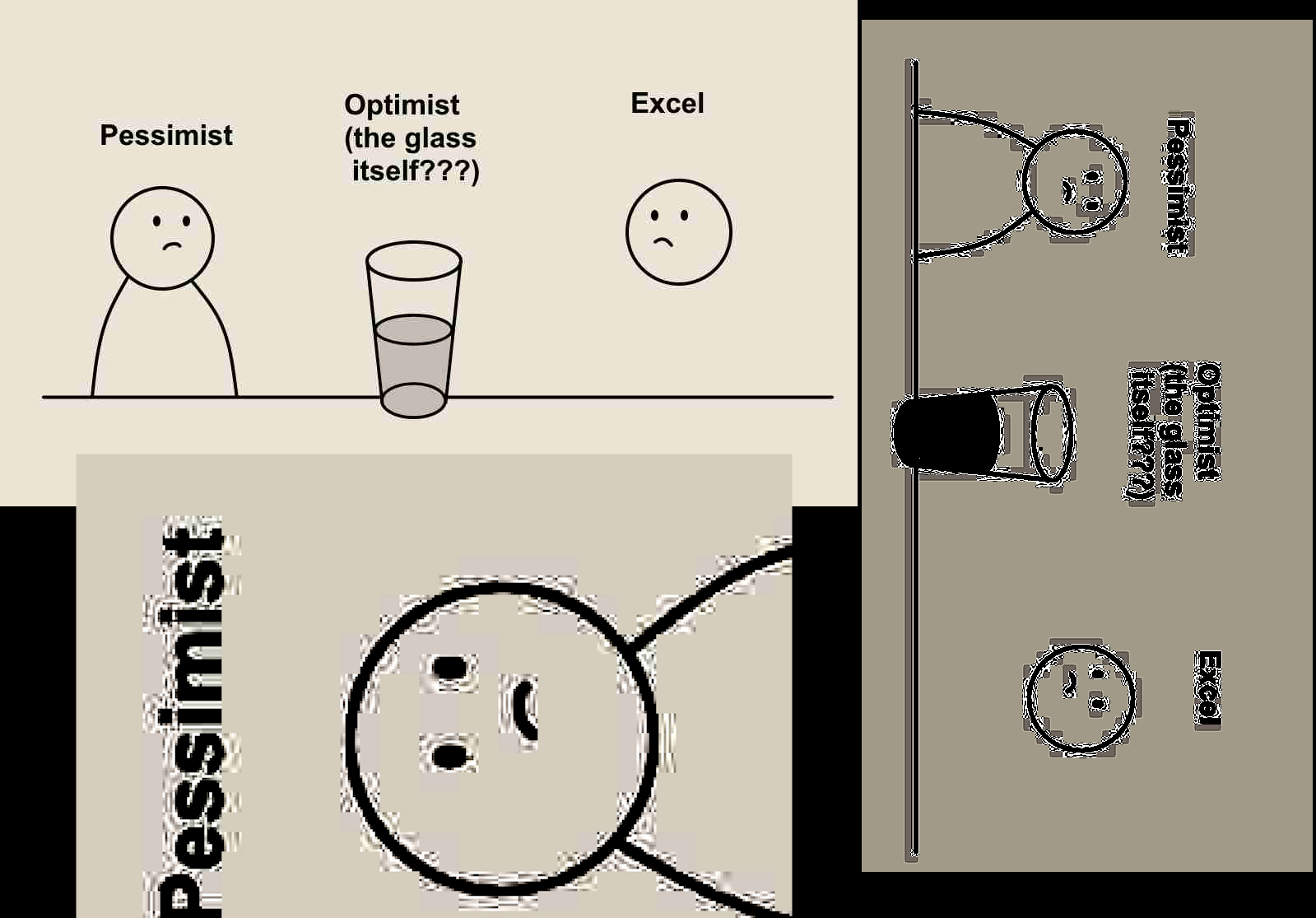

Both guys have an arm that melds into the surface that the glass is sitting on.

Nah the arms are in front of the railing.

The “optimist” is the glass.

So what? It’s visually balanced. I would shy away from reading surrealist meaning into it, but it’s not like humans never make that kind of choice.

The plain fact of the matter is that nowadays it’s often simply impossible to tell. The people who say “they can always tell” probably never even tried to draw hands; otherwise they’d be able to tell twelve-fingered monstrosities apart from an artist breaking their pencil in frustration and keeping the resulting line because it’s closer to passing than anything they ever drew before.

I agree that the “arm thing” arguments are wrong, since it’s pretty clearly just an ‘artistic choice’ that a human could very well make.

But that said, these images are 100% provably AI. If you haven’t built up the intuition that immediately tells you it’s AI (which is fair, most people don’t have unlimited time for looking at AI images), they still have the trademark “subtle texture in flat colors” that basically never shows up in human-made digital art. The blacks aren’t actually perfectly black but have random noise, and the background color isn’t perfectly uniform but has random noise too.

This isn’t visible to the human eye, but it can be detected with tools, and it’s an artifact of how (I believe) diffusion models work.
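As a rough illustration of what “detected with tools” could mean, here is a minimal Python sketch that summarises how flat a supposedly flat region really is. It assumes you have already cropped the region and converted it to a grid of 8-bit grayscale values (loading the image is not shown); `flat_region_stats` is a hypothetical helper, not an existing tool, and the noisy patch is synthetic demo data.

```python
import random
from collections import Counter
from statistics import pstdev


def flat_region_stats(pixels):
    """Summarise how uniform a region of 8-bit grayscale values is.

    Hypothetical helper for illustration. `pixels` is a list of rows of ints (0-255).
    Returns (distinct_values, std_dev, max_deviation_from_most_common_value).
    """
    values = [v for row in pixels for v in row]
    counts = Counter(values)
    mode = counts.most_common(1)[0][0]  # the colour the region is "supposed" to be
    return len(counts), pstdev(values), max(abs(v - mode) for v in values)


# Synthetic demo data: a truly flat white patch vs. one with up to 3 levels of random darkening.
rng = random.Random(0)
flat = [[255] * 16 for _ in range(16)]
noisy = [[255 - rng.randint(0, 3) for _ in range(16)] for _ in range(16)]

print(flat_region_stats(flat))   # (1, 0.0, 0)
print(flat_region_stats(noisy))  # several distinct values and a non-zero spread
```

A flat fill gives one distinct value and zero spread; a couple of levels of random spread is the kind of “subtle texture” meant here. Where exactly to draw the line between human and AI images is not something this sketch establishes.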

Not using plain RGB black and white isn’t a new thing, and neither is randomising. Digital artists might rather go with a uniform watercolour-like background to create some framing instead of an actual full background, but meh. It’s far from a smoking gun.

The one argument that does make me think this is AI was someone saying “Yeah, the new ChatGPT tends to use that exact colour combination and font”. It could still be a human artist imitating ChatGPT, but the preponderance of evidence points to AI.

I can generally spot SD and SDXL generations, but on the flip side I know what I’d need to do to obscure the fact that they were used. The main issue with the bulk AI generations I see floating around isn’t that they’re AI-generated, it’s that they were generated by people with not even a hint of an artistic eye. Or vision.

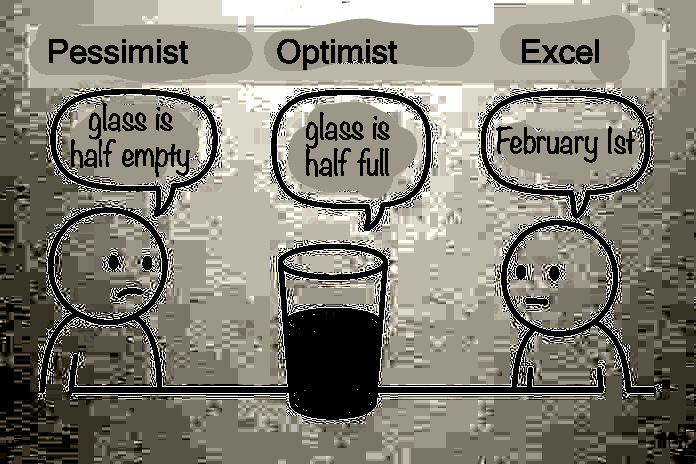

But that doesn’t really matter in this case, as this work isn’t about lines on screen; those are just a mechanism to convey a joke about Excel. It could have worked in text form. The artistry lies in the idea, not in the drawing or the imitation thereof.

The thing missing here is that usually, when you add texture, you want it to be visible. The AI ‘watercolor’ is usually extremely subtle, only affecting the 1-2 least significant bits of each color value, to the point that even with a massive contrast increase it’s hard to notice. It also usually varies pixel by pixel, like “white noise”, instead of on a larger scale like you’d expect from watercolor.

(It also affects the black lines, which is where it starts getting really odd.)
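One simple way to make those 1-2 low bits visible is bit-plane slicing: throw away the high bits and stretch what remains over the full 0-255 range. This is a minimal Python sketch on plain lists of 8-bit grayscale values; the function name and its `bits` parameter are hypothetical, chosen for this example.

```python
def amplify_low_bits(pixels, bits=2):
    """Keep only the lowest `bits` bits of each 8-bit value and scale them up toward 0-255.

    A perfectly flat fill maps to one uniform value; noise confined to the
    low bits turns into clearly visible speckle.
    """
    if not 1 <= bits <= 8:
        raise ValueError("bits must be between 1 and 8")
    mask = (1 << bits) - 1  # e.g. bits=2 -> 0b11
    scale = 255 // mask     # spread 0..mask roughly evenly over 0..255
    return [[(v & mask) * scale for v in row] for row in pixels]


print(amplify_low_bits([[255, 254, 253, 252]]))  # [[255, 170, 85, 0]]
print(amplify_low_bits([[255, 255, 255, 255]]))  # [[255, 255, 255, 255]]
```

What matters in the output is variation, not the absolute value: a flat fill of any colour still comes out uniform, while values that differ only in their last two bits become 85 or more levels apart.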

I guess it isn’t really 100% proof, but it’s at least 99%: I can’t find a presumed-human-made comic that has it, yet every single “looks like AI” comic seems to have it.

Could potentially be a compression artefact, but I freely admit I’m playing devil’s advocate right now. Let’s not go down that route, or we’d end up at function approximation with randomised methods and “well, intelligence actually is just compression”.

I actually looked into (JPEG) compression artifacts a bit, and it’s true to the extent that if you compress the image badly enough, it eventually becomes impossible to determine whether the color was originally flat.

(GIF and dithering are a different matter, but they’re very rare these days, and you can distinguish dithering from “AI noise” because dithering forms “regular” patterns while “AI noise” is random.)
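The “regular vs. random” distinction can be checked numerically with autocorrelation: an ordered (Bayer-matrix) dither repeats every few pixels, so the image correlates almost perfectly with itself shifted by that period, while random noise does not. A minimal Python sketch under those assumptions: it only targets ordered dithering (error-diffusion dithers such as Floyd-Steinberg are much less periodic), `autocorr` is a hypothetical helper, and both test images are synthetic.

```python
import random

# Standard 4x4 Bayer threshold matrix used for ordered dithering.
BAYER_4 = [[0, 8, 2, 10],
           [12, 4, 14, 6],
           [3, 11, 1, 9],
           [15, 7, 13, 5]]


def autocorr(img, dx, dy):
    """Normalised autocorrelation of a 2D grid at shift (dx, dy), wrapping at the edges."""
    h, w = len(img), len(img[0])
    mean = sum(map(sum, img)) / (h * w)
    d = [[v - mean for v in row] for row in img]
    var = sum(v * v for row in d for v in row)
    if var == 0:
        return 1.0  # a perfectly flat grid is trivially identical to any shift of itself
    cov = sum(d[y][x] * d[(y + dy) % h][(x + dx) % w]
              for y in range(h) for x in range(w))
    return cov / var


# 50% grey rendered with ordered dithering: a pattern that tiles every 4 pixels.
dithered = [[255 if BAYER_4[y % 4][x % 4] < 8 else 0 for x in range(16)] for y in range(16)]

# "AI-style" noise: small random deviations around a light background colour.
rng = random.Random(0)
noisy = [[250 + rng.randint(-2, 2) for _ in range(16)] for _ in range(16)]

print(autocorr(dithered, 4, 0))  # 1.0: the pattern repeats exactly
print(autocorr(noisy, 4, 0))     # close to 0: no repeating structure
```

On a real crop you would try a few small shifts (2, 4, 8 pixels in each direction), since the dither period is not known in advance.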

Though from a few tests I did, compression only adds noise to comic-style images near “complex geometry”, while removing noise in flat areas. This tracks with my rudimentary understanding of the discrete cosine transform JPEG uses*, so any comic with a sufficiently large flat area is detectable as AI with this method, assuming the compression quality setting is not unreasonably low.

*(which is basically a variant of the Fourier transform)
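For reference, the forward DCT that baseline JPEG applies to each 8×8 block of level-shifted samples f(x, y) is usually written as below. For a perfectly flat block f(x, y) = a, every cosine sum with u ≥ 1 or v ≥ 1 adds up to zero, so only the DC term F(0, 0) = 8a survives. It is “Fourier-like” in that it decomposes the block into cosine frequencies, and it can be derived from the discrete Fourier transform of a symmetrically extended copy of the block.

$$
F(u,v) = \frac{1}{4}\, C(u)\, C(v) \sum_{x=0}^{7} \sum_{y=0}^{7} f(x,y)\,
\cos\frac{(2x+1)u\pi}{16}\, \cos\frac{(2y+1)v\pi}{16},
\qquad
C(k) = \begin{cases} 1/\sqrt{2} & k = 0 \\ 1 & k > 0 \end{cases}
$$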

I recreated most of the comic image by hand (using basic line and circle drawing tools, ha) and applied heavy compression. The flat areas remain perfectly flat (as you’d expect, since a flat color is easier to compress).
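The “flat stays flat” observation can be reproduced with a toy version of JPEG’s lossy step: level-shift an 8×8 block, take its DCT, round every coefficient to a multiple of a quantisation step, and transform back. This Python sketch is a simplification under stated assumptions: it uses one uniform step `q` instead of JPEG’s per-coefficient quantisation tables, skips colour conversion, chroma subsampling and entropy coding entirely, and all function names are made up for the example.

```python
import math
import random

N = 8  # JPEG works on 8x8 blocks


def _c(k):
    # Orthonormal DCT scaling factors.
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def _cos(a, k):
    return math.cos((2 * a + 1) * k * math.pi / (2 * N))


def dct2(block):
    """Orthonormal 2D DCT-II of an 8x8 block."""
    return [[_c(u) * _c(v) * sum(block[x][y] * _cos(x, u) * _cos(y, v)
                                 for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    """Inverse of dct2 (orthonormal 2D DCT-III)."""
    return [[sum(_c(u) * _c(v) * coeffs[u][v] * _cos(x, u) * _cos(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def jpeg_like_roundtrip(block, q):
    """Level-shift, DCT, quantise with a single uniform step q, then reconstruct.

    Simplification: real JPEG uses an 8x8 quantisation table, not one step for all coefficients.
    """
    coeffs = dct2([[v - 128 for v in row] for row in block])
    quantised = [[round(c / q) * q for c in row] for row in coeffs]
    return [[min(255, max(0, round(v + 128))) for v in row] for row in idct2(quantised)]


rng = random.Random(1)
flat = [[250] * N for _ in range(N)]
noisy = [[250 + rng.randint(-1, 1) for _ in range(N)] for _ in range(N)]

for name, block in (("flat", flat), ("noisy", noisy)):
    out = jpeg_like_roundtrip(block, q=40)
    print(name, sorted({v for row in out for v in row}))
```

With a step as coarse as `q=40`, the flat block comes back flat (possibly shifted by a level or two), and so does the block with ±1 noise, because no non-DC coefficient of that noise is large enough to survive rounding. That second case is the “compress badly enough and you can no longer tell” effect; with a small step the noise tends to survive instead.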

But the AI image reveals a gradient that is invisible to the human eye. (Incidentally, the original comic does appear heavily JPEG’d, to the point that I suspect it could actually be ChatGPT adding artificial “fake compression artifacts” by mistake.)
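A min-max contrast stretch is one way to make such an invisible gradient visible: map the darkest value in a crop to 0 and the brightest to 255, so a difference of one level can become a jump of 85 or more. A minimal Python sketch on a grid of 8-bit grayscale values; the function name is hypothetical and the sample row is synthetic.

```python
def stretch_contrast(pixels):
    """Linearly map the crop's min..max range onto 0..255 so tiny differences become visible."""
    values = [v for row in pixels for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        return [[0 for _ in row] for row in pixels]  # perfectly flat: nothing to reveal
    return [[round((v - lo) * 255 / (hi - lo)) for v in row] for row in pixels]


print(stretch_contrast([[250, 251, 252, 253]]))  # [[0, 85, 170, 255]]
print(stretch_contrast([[255, 255], [255, 255]]))  # [[0, 0], [0, 0]]
```

The design choice here is to return all zeros for a perfectly flat crop, so “no structure at all” stays visually distinct from a faint gradient.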

There’s also weird “painting” behind the text which serves no purpose (and why would a human paint almost indistinguishable white on white for no reason?).

The new AI-generated comic has less compression, so the noise is much more obvious. There are still a lot of compression artifacts, but I think those are there because of the noise, as noise is almost by definition impossible to compress.
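The “noise is almost by definition impossible to compress” point is easy to check with any general-purpose compressor. This sketch uses Python’s lossless `zlib` as a stand-in (JPEG is lossy and works differently, so this only illustrates the entropy argument) on synthetic data: 4096 identical 8-bit values versus the same colour with ±2 levels of random noise. `compressed_size` is a hypothetical helper name.

```python
import random
import zlib


def compressed_size(values):
    """Size in bytes of a sequence of 8-bit values after zlib compression at level 9."""
    return len(zlib.compress(bytes(values), 9))


rng = random.Random(42)
flat = [250] * 4096
noisy = [250 + rng.randint(-2, 2) for _ in range(4096)]

print(compressed_size(flat))   # a few dozen bytes at most
print(compressed_size(noisy))  # over a thousand bytes
```

Five equally likely levels carry about log2(5) ≈ 2.3 bits each, so no lossless scheme can store 4096 of them in much less than about 1.2 KB on average, whereas the flat run is described by little more than one value and a length.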

Perspective.
