Last week, Copilot made an unsolicited appearance in Microsoft 365. This week, Apple turned on Apple Intelligence by default in its upcoming operating system releases. And it isn’t easy to get through any of Google’s services without stumbling over Gemini.

Regulators worldwide are keen to ensure that marketing and similar services are opt-in. When dark patterns are used to steer users in one direction or another, lawmakers pay close attention.

But, for some reason, forcing AI on customers is acceptable. Rather than asking “we’re going to shovel a load of AI services into your apps that you never asked for, but our investors really need you to use, is this OK?” the assumption instead is that users will be delighted to see their formerly pristine applications cluttered with AI features.

Customers have not asked for any of this. There has been no clamoring for search summaries, no pent-up demand for the revival of a jumped-up Clippy. There is no desire to wreak further havoc on the environment to get an almost-correct recipe for tomato soup. And yet here we are, ready or not.

Without a choice to opt in, the beatings will continue until AI adoption improves or users find that pesky opt-out option.

For the user data. That’s it. That’s why it exists. That and the dream of replacing some jobs.

I am convinced that everyone really has to make this work one way or another because so goddamn much money was - and still is - being spent on this garbage.

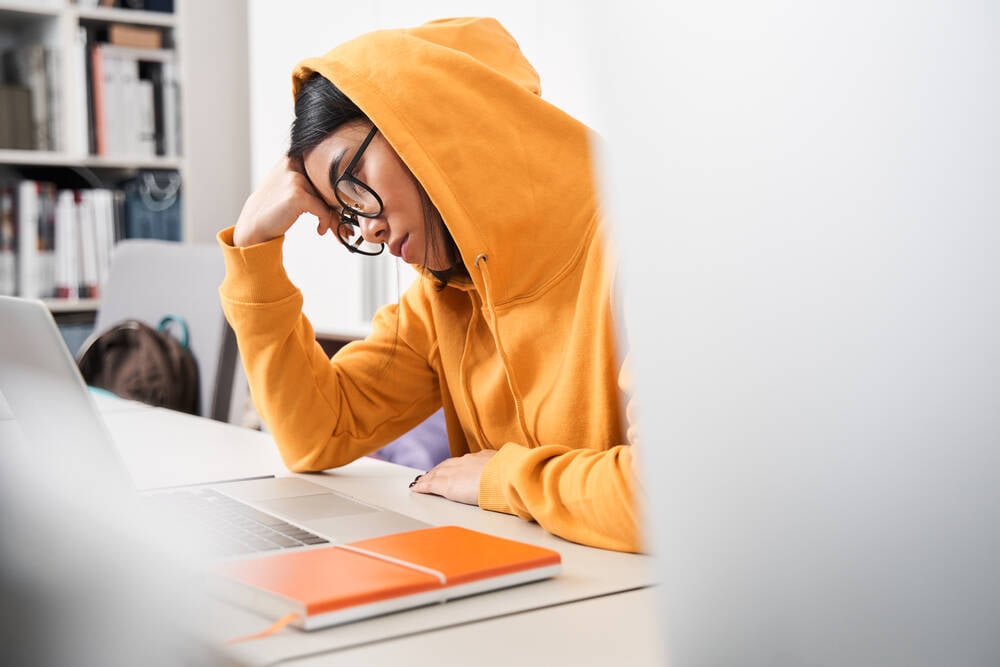

We’re being asked at work to “up our Copilot usage” to justify the license costs. Pretty sad when you need to be forced to use it.

Please refuse and at every opportunity let them know how stupid they are for wasting that money.

Use it and then explain how much of a waste of time it was to get the wrong results.

No, that just plays into their hands. If you complain that it sucks, you’re just “using it wrong”.

It’s better to not use it at all, so they end up with dogshit engagement metrics and the exec who approved the spend has to explain to the board why they wasted so much money on something their employees clearly don’t want or need to use.

Remember, they won’t show the complaints, just the numbers, so those numbers have to suck if you really want the message to get through.

This! ☝️

Just because you brought up Copilot, I think people need to see this:

lmao my workplace encourages use / exploration of LLMs when useful, but that’s stupid

Correct. It’s about metrics. They’re making AI opt-out because they desperately need to pump user engagement numbers, even if those numbers don’t mean anything.

It’s all for the shareholders. Big tech has been, for a while now, chasing a new avenue for meteoric growth, because that’s what investors have come to expect. So they went all in on AI, to the tune of billions upon billions, and came crashing, hard, into the reality that consumers don’t need it and enterprise can’t use it.

Transformer models have two fatal flaws: the hallucination problem, to which there is still no solution, makes them unsuitable for enterprise applications, and their cost per operation makes them unaffordable for retail customer applications (i.e., a chatbot that gives you synonyms while you write is the sort of thing people will happily use, but won’t pay $40 a month for).

So now the C-suites are standing over the edge of the burning trash fire they pushed all that money into, knowing that at any moment their shareholders are going to wake up and shove them into it too. They’ve got to come up with some kind of proof that this investment is paying off. They can’t find that proof in sales, because no one is buying, so instead they’re going to use “engagement”; shove AI into everything, to the point where people basically wind up using it by accident, then use those metrics to claim that everyone loves it. And then pray to God that one of those two fatal flaws will be solved in time to make their investments pay off in the long run.

Yeah, it’s sunk cost fallacy all the way down. We’re just being harvested because…fuck us I guess.

It’s a combination of sunk cost and FOMO.

This is it. “We spent so damn much money on this, we gotta see some NUMBERS on the dashboard!”

They have to put it into everything and have people and apps depend on it before the AI bubble pops so after the pop it’s too difficult to remove or break the dependency. As long as it’s in there they can charge subscription and fees for it.

“coPilot, what is the Sunk Cost Fallacy?”

lmao, who is going to OPT INto the anti-privacy replace-your-job machine?

AI is data hungry. If they opt you in, they also get more data from you automatically.

Even if everyone opts out on day 2, they get a crap ton of free stuff in the meantime.

Because they need a constant stream of data to feed the models. If people had to opt in then they’d be less likely to do so and the models would starve and become less accurate and therefore less valuable to sell. Remember the trained model is the valuable piece of the entire thing, that’s what companies pay money to gain access to. There’s no point in sitting on all that user data if they can’t turn it into a marketable product by feeding it into a model.

Because most people are too lazy and/or stupid to bother opting out. If they had an authentic metric for demand, then they couldn’t trick the share value into going up.

Edit: fixed autocowrecks

lmao when have tech companies ever given a semblance of a shit about consent? It’s an industry that has deep roots in misogynistic nerds and drunk frat bros.

what happened to asking permission first?

Masculine energy, I guess. At least there’s still an opt-out…

Odds on the opt out actually opting you out instead of pretending to?

0 to 1

For now

Yeah, it’s called switching away from these shit-ass invasive products wherever possible.

I tried co-pilot. Once.

I asked it “why does Windows Defender peg my HDD* at 100%”

The reply was “I don’t know, but here are some google searches that might help”

Microsoft’s own Copilot doesn’t seem to have access to Microsoft products, so now I uninstall/deactivate it at every opportunity.

*Yes, an HDD. Not ideal for performance these days, but it’s the last laptop I have with an HDD, and I use it for experiments.

I call bullshit. Because no LLM ever says, “I don’t know.” It just confidently invents an answer out of thin air.

Only mostly facetious here…

I guessed it was the classic “first one comes free, the next one we’ll see”. They are offering AI for free to make people dependent on the technology, once people can’t live without their AI, then corporations will start profiting from subscriptions.

Because very few people are ever going to turn an optional feature on, whether they would ultimately like it or not. They need to be shown it. If they hate it, they will turn it off.

They could be offered the choice.

Opt-out is always bad because it is meant to exploit users who are not aware that a certain feature can be turned off. Even among those who are, not all are confident going through settings.

It is to circumvent E2E encryption and get all your data. Here is a good video that gives a great summary: https://youtu.be/yh1pF1zaauc

I heard Windows was adding a “snapshot” feature and guessed that’s what they were doing, but uhh… is there really “AI Screens” hardware doing this too?

Not yet on PC, but the iPhone 16 already has a chip, and the new Copilot-certified laptops will have it as well. macOS already has the capabilities with that daemon mentioned in the video.

The era of surveillance is making huge progress every week.

Data collection. And hoping to stumble upon consistently useful use cases yet-unknown.

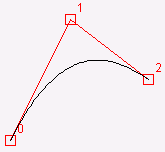

The reason is probably that the indexing takes a lot of time. You can’t just turn AI on and expect it to work. You need to turn it on and wait for it to dig through all your data and index it for fast retrieval.

It’s kind of funny that even for “dumb search”, Microsoft turned on indexing by default and always made it difficult to disable. However, the search was so crappy that there was never a benefit to it.